66 changed files with 6421 additions and 0 deletions

Unified View

Diff Options

-

+114 -0FastSpeech/.gitignore

-

+21 -0FastSpeech/LICENSE

-

+68 -0FastSpeech/README.md

-

BINFastSpeech/alignments.zip

-

+4 -0FastSpeech/audio/__init__.py

-

+94 -0FastSpeech/audio/audio_processing.py

-

+8 -0FastSpeech/audio/hparams.py

-

+158 -0FastSpeech/audio/stft.py

-

+66 -0FastSpeech/audio/tools.py

-

+34 -0FastSpeech/data/ljspeech.py

-

+124 -0FastSpeech/dataset.py

-

+54 -0FastSpeech/fastspeech.py

-

+317 -0FastSpeech/glow.py

-

+52 -0FastSpeech/hparams.py

-

BINFastSpeech/img/model.png

-

BINFastSpeech/img/model_test.jpg

-

BINFastSpeech/img/tacotron2_outputs.jpg

-

+29 -0FastSpeech/loss.py

-

+404 -0FastSpeech/modules.py

-

+44 -0FastSpeech/optimizer.py

-

+61 -0FastSpeech/preprocess.py

-

BINFastSpeech/results/0.wav

-

BINFastSpeech/results/1.wav

-

BINFastSpeech/results/2.wav

-

+74 -0FastSpeech/synthesis.py

-

+3 -0FastSpeech/tacotron2/__init__.py

-

+92 -0FastSpeech/tacotron2/hparams.py

-

+36 -0FastSpeech/tacotron2/layers.py

-

+533 -0FastSpeech/tacotron2/model.py

-

+29 -0FastSpeech/tacotron2/utils.py

-

+75 -0FastSpeech/text/__init__.py

-

+89 -0FastSpeech/text/cleaners.py

-

+64 -0FastSpeech/text/cmudict.py

-

+71 -0FastSpeech/text/numbers.py

-

+19 -0FastSpeech/text/symbols.py

-

+194 -0FastSpeech/train.py

-

+100 -0FastSpeech/transformer/Beam.py

-

+9 -0FastSpeech/transformer/Constants.py

-

+230 -0FastSpeech/transformer/Layers.py

-

+145 -0FastSpeech/transformer/Models.py

-

+27 -0FastSpeech/transformer/Modules.py

-

+97 -0FastSpeech/transformer/SubLayers.py

-

+6 -0FastSpeech/transformer/__init__.py

-

+183 -0FastSpeech/utils.py

-

+3 -0FastSpeech/waveglow/__init__.py

-

+46 -0FastSpeech/waveglow/convert_model.py

-

+310 -0FastSpeech/waveglow/glow.py

-

+57 -0FastSpeech/waveglow/inference.py

-

+147 -0FastSpeech/waveglow/mel2samp.py

-

+129 -0SqueezeWave/README.md

-

+445 -0SqueezeWave/SqueezeWave_computational_complexity.ipynb

-

+80 -0SqueezeWave/TacotronSTFT.py

-

+93 -0SqueezeWave/audio_processing.py

-

+40 -0SqueezeWave/configs/config_a128_c128.json

-

+40 -0SqueezeWave/configs/config_a128_c256.json

-

+40 -0SqueezeWave/configs/config_a256_c128.json

-

+40 -0SqueezeWave/configs/config_a256_c256.json

-

+70 -0SqueezeWave/convert_model.py

-

+39 -0SqueezeWave/denoiser.py

-

+191 -0SqueezeWave/distributed.py

-

+328 -0SqueezeWave/glow.py

-

+87 -0SqueezeWave/inference.py

-

+150 -0SqueezeWave/mel2samp.py

-

+8 -0SqueezeWave/requirements.txt

-

+147 -0SqueezeWave/stft.py

-

+203 -0SqueezeWave/train.py

+ 114

- 0

FastSpeech/.gitignore

View File

| @ -0,0 +1,114 @@ | |||||

| # Byte-compiled / optimized / DLL files | |||||

| __pycache__/ | |||||

| *.py[cod] | |||||

| *$py.class | |||||

| # C extensions | |||||

| *.so | |||||

| # Distribution / packaging | |||||

| .Python | |||||

| build/ | |||||

| develop-eggs/ | |||||

| dist/ | |||||

| downloads/ | |||||

| eggs/ | |||||

| .eggs/ | |||||

| lib/ | |||||

| lib64/ | |||||

| parts/ | |||||

| sdist/ | |||||

| var/ | |||||

| wheels/ | |||||

| *.egg-info/ | |||||

| .installed.cfg | |||||

| *.egg | |||||

| MANIFEST | |||||

| # PyInstaller | |||||

| # Usually these files are written by a python script from a template | |||||

| # before PyInstaller builds the exe, so as to inject date/other infos into it. | |||||

| *.manifest | |||||

| *.spec | |||||

| # Installer logs | |||||

| pip-log.txt | |||||

| pip-delete-this-directory.txt | |||||

| # Unit test / coverage reports | |||||

| htmlcov/ | |||||

| .tox/ | |||||

| .coverage | |||||

| .coverage.* | |||||

| .cache | |||||

| nosetests.xml | |||||

| coverage.xml | |||||

| *.cover | |||||

| .hypothesis/ | |||||

| .pytest_cache/ | |||||

| # Translations | |||||

| *.mo | |||||

| *.pot | |||||

| # Django stuff: | |||||

| *.log | |||||

| local_settings.py | |||||

| db.sqlite3 | |||||

| # Flask stuff: | |||||

| instance/ | |||||

| .webassets-cache | |||||

| # Scrapy stuff: | |||||

| .scrapy | |||||

| # Sphinx documentation | |||||

| docs/_build/ | |||||

| # PyBuilder | |||||

| target/ | |||||

| # Jupyter Notebook | |||||

| .ipynb_checkpoints | |||||

| # pyenv | |||||

| .python-version | |||||

| # celery beat schedule file | |||||

| celerybeat-schedule | |||||

| # SageMath parsed files | |||||

| *.sage.py | |||||

| # Environments | |||||

| .env | |||||

| .venv | |||||

| env/ | |||||

| venv/ | |||||

| ENV/ | |||||

| env.bak/ | |||||

| venv.bak/ | |||||

| # Spyder project settings | |||||

| .spyderproject | |||||

| .spyproject | |||||

| # Rope project settings | |||||

| .ropeproject | |||||

| # mkdocs documentation | |||||

| /site | |||||

| # mypy | |||||

| .mypy_cache/ | |||||

| __pycache__ | |||||

| .vscode | |||||

| .DS_Store | |||||

| data/train.txt | |||||

| model_new/ | |||||

| mels/ | |||||

| alignments/ | |||||

+ 21

- 0

FastSpeech/LICENSE

View File

| @ -0,0 +1,21 @@ | |||||

| MIT License | |||||

| Copyright (c) 2019 Zhengxi Liu | |||||

| Permission is hereby granted, free of charge, to any person obtaining a copy | |||||

| of this software and associated documentation files (the "Software"), to deal | |||||

| in the Software without restriction, including without limitation the rights | |||||

| to use, copy, modify, merge, publish, distribute, sublicense, and/or sell | |||||

| copies of the Software, and to permit persons to whom the Software is | |||||

| furnished to do so, subject to the following conditions: | |||||

| The above copyright notice and this permission notice shall be included in all | |||||

| copies or substantial portions of the Software. | |||||

| THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR | |||||

| IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, | |||||

| FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE | |||||

| AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER | |||||

| LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, | |||||

| OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE | |||||

| SOFTWARE. | |||||

+ 68

- 0

FastSpeech/README.md

View File

| @ -0,0 +1,68 @@ | |||||

| # FastSpeech-Pytorch | |||||

| The Implementation of FastSpeech Based on Pytorch. | |||||

| ## Update | |||||

| ### 2019/10/23 | |||||

| 1. Fix bugs in alignment; | |||||

| 2. Fix bugs in transformer; | |||||

| 3. Fix bugs in LengthRegulator; | |||||

| 4. Change the way to process audio; | |||||

| 5. Use waveglow to synthesize. | |||||

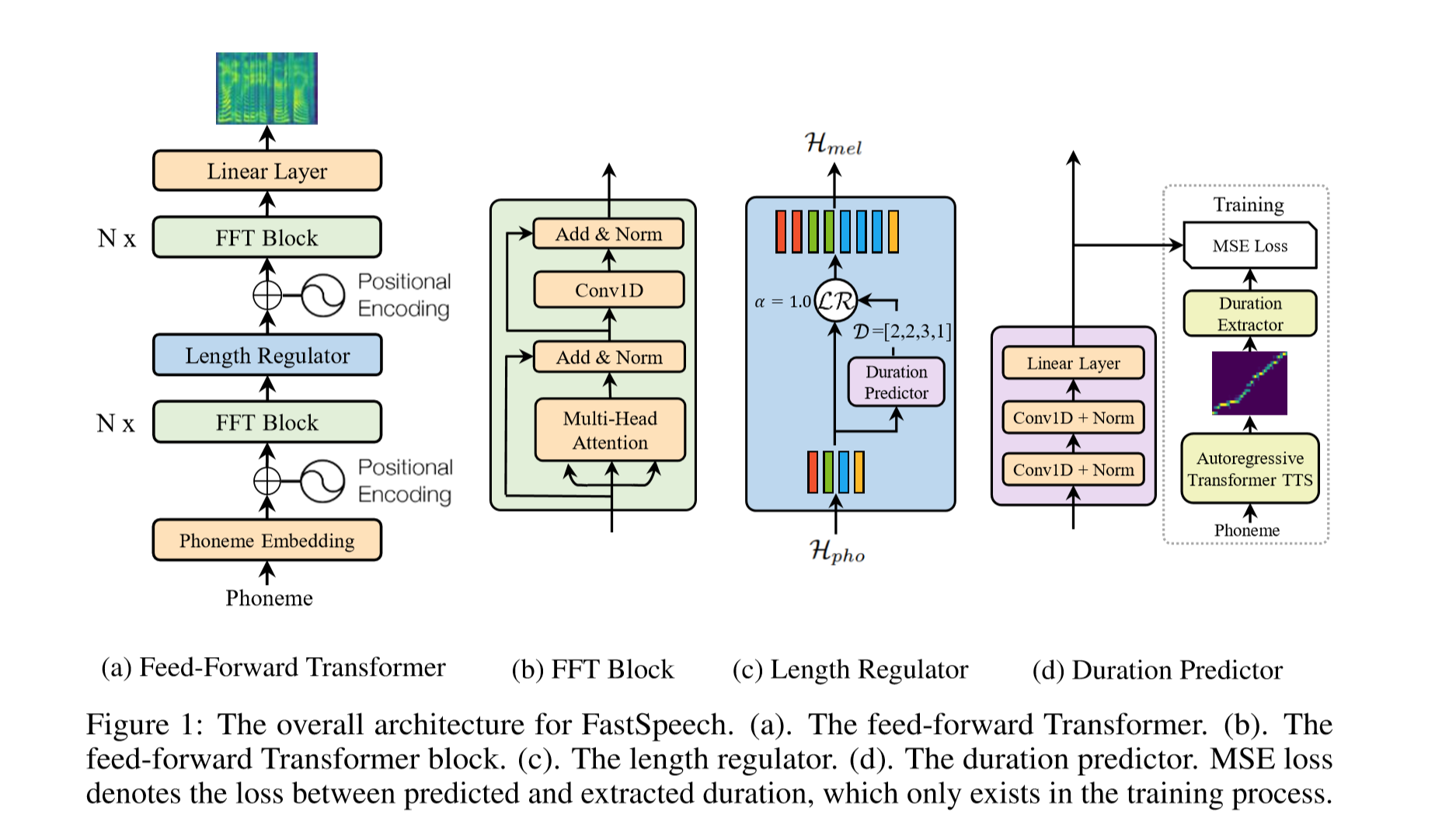

| ## Model | |||||

| <div align="center"> | |||||

| <img src="img/model.png" style="max-width:100%;"> | |||||

| </div> | |||||

| ## My Blog | |||||

| - [FastSpeech Reading Notes](https://zhuanlan.zhihu.com/p/67325775) | |||||

| - [Details and Rethinking of this Implementation](https://zhuanlan.zhihu.com/p/67939482) | |||||

| ## Start | |||||

| ### Dependencies | |||||

| - python 3.6 | |||||

| - CUDA 10.0 | |||||

| - pytorch==1.1.0 | |||||

| - nump==1.16.2 | |||||

| - scipy==1.2.1 | |||||

| - librosa==0.6.3 | |||||

| - inflect==2.1.0 | |||||

| - matplotlib==2.2.2 | |||||

| ### Prepare Dataset | |||||

| 1. Download and extract [LJSpeech dataset](https://keithito.com/LJ-Speech-Dataset/). | |||||

| 2. Put LJSpeech dataset in `data`. | |||||

| 3. Unzip `alignments.zip` \* | |||||

| 4. Put [Nvidia pretrained waveglow model](https://drive.google.com/file/d/1WsibBTsuRg_SF2Z6L6NFRTT-NjEy1oTx/view?usp=sharing) in the `waveglow/pretrained_model`; | |||||

| 5. Run `python preprocess.py`. | |||||

| *\* if you want to calculate alignment, don't unzip alignments.zip and put [Nvidia pretrained Tacotron2 model](https://drive.google.com/file/d/1c5ZTuT7J08wLUoVZ2KkUs_VdZuJ86ZqA/view?usp=sharing) in the `Tacotron2/pretrained_model`* | |||||

| ## Training | |||||

| Run `python train.py`. | |||||

| ## Test | |||||

| Run `python synthesis.py`. | |||||

| ## Pretrained Model | |||||

| - Baidu: [Step:112000](https://pan.baidu.com/s/1by3-8t3A6uihK8K9IFZ7rg) Enter Code: xpk7 | |||||

| - OneDrive: [Step:112000](https://1drv.ms/u/s!AuC2oR4FhoZ29kriYhuodY4-gPsT?e=zUIC8G) | |||||

| ## Notes | |||||

| - In the paper of FastSpeech, authors use pre-trained Transformer-TTS to provide the target of alignment. I didn't have a well-trained Transformer-TTS model so I use Tacotron2 instead. | |||||

| - The examples of audio are in `results`. | |||||

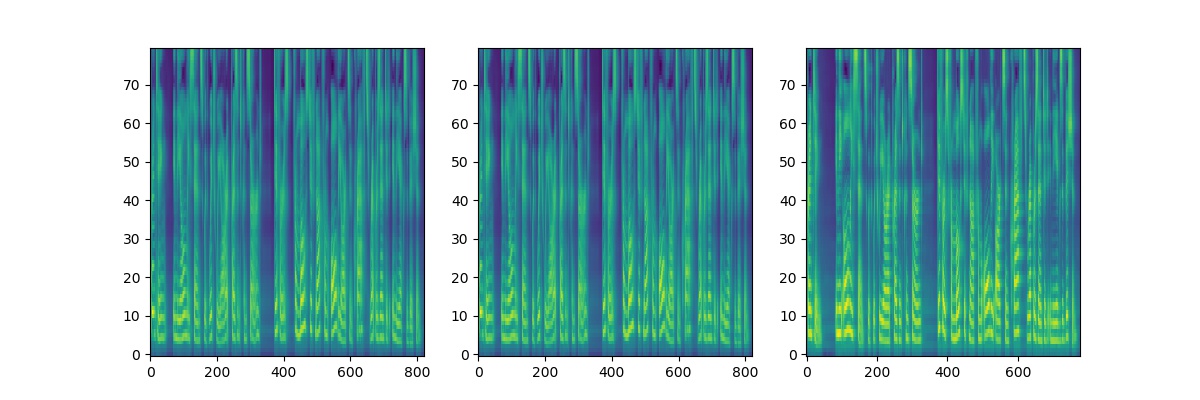

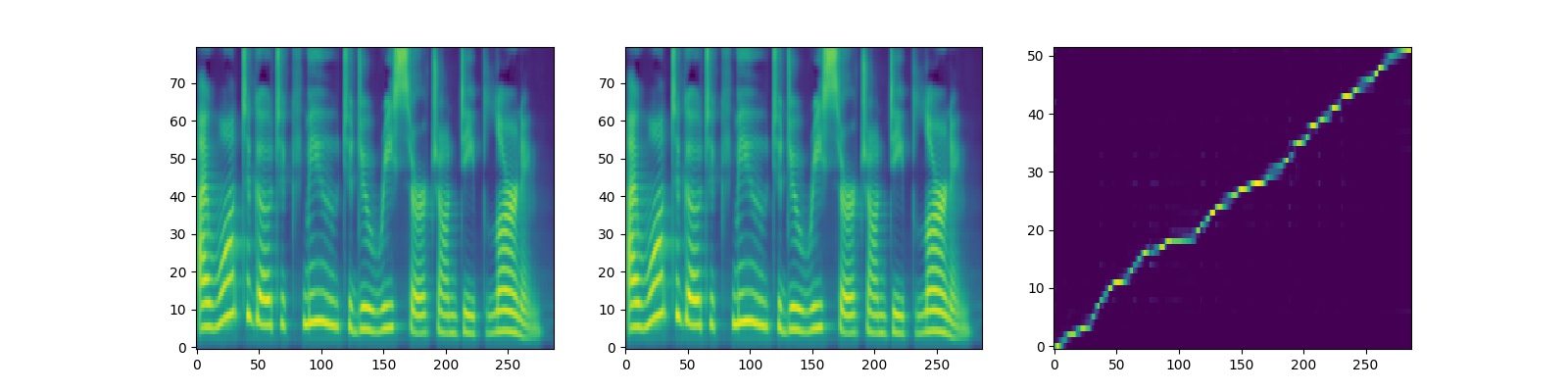

| - The outputs and alignment of Tacotron2 are shown as follows (The sentence for synthesizing is "I want to go to CMU to do research on deep learning."): | |||||

| <div align="center"> | |||||

| <img src="img/tacotron2_outputs.jpg" style="max-width:100%;"> | |||||

| </div> | |||||

| - The outputs of FastSpeech and Tacotron2 (Right one is tacotron2) are shown as follows (The sentence for synthesizing is "Printing, in the only sense with which we are at present concerned, differs from most if not from all the arts and crafts represented in the Exhibition."): | |||||

| <div align="center"> | |||||

| <img src="img/model_test.jpg" style="max-width:100%;"> | |||||

| </div> | |||||

| ## Reference | |||||

| - [The Implementation of Tacotron Based on Tensorflow](https://github.com/keithito/tacotron) | |||||

| - [The Implementation of Transformer Based on Pytorch](https://github.com/jadore801120/attention-is-all-you-need-pytorch) | |||||

| - [The Implementation of Transformer-TTS Based on Pytorch](https://github.com/xcmyz/Transformer-TTS) | |||||

| - [The Implementation of Tacotron2 Based on Pytorch](https://github.com/NVIDIA/tacotron2) | |||||

BIN

FastSpeech/alignments.zip

View File

+ 4

- 0

FastSpeech/audio/__init__.py

View File

| @ -0,0 +1,4 @@ | |||||

| import audio.hparams | |||||

| import audio.tools | |||||

| import audio.stft | |||||

| import audio.audio_processing | |||||

+ 94

- 0

FastSpeech/audio/audio_processing.py

View File

| @ -0,0 +1,94 @@ | |||||

| import torch | |||||

| import numpy as np | |||||

| from scipy.signal import get_window | |||||

| import librosa.util as librosa_util | |||||

| def window_sumsquare(window, n_frames, hop_length=200, win_length=800, | |||||

| n_fft=800, dtype=np.float32, norm=None): | |||||

| """ | |||||

| # from librosa 0.6 | |||||

| Compute the sum-square envelope of a window function at a given hop length. | |||||

| This is used to estimate modulation effects induced by windowing | |||||

| observations in short-time fourier transforms. | |||||

| Parameters | |||||

| ---------- | |||||

| window : string, tuple, number, callable, or list-like | |||||

| Window specification, as in `get_window` | |||||

| n_frames : int > 0 | |||||

| The number of analysis frames | |||||

| hop_length : int > 0 | |||||

| The number of samples to advance between frames | |||||

| win_length : [optional] | |||||

| The length of the window function. By default, this matches `n_fft`. | |||||

| n_fft : int > 0 | |||||

| The length of each analysis frame. | |||||

| dtype : np.dtype | |||||

| The data type of the output | |||||

| Returns | |||||

| ------- | |||||

| wss : np.ndarray, shape=`(n_fft + hop_length * (n_frames - 1))` | |||||

| The sum-squared envelope of the window function | |||||

| """ | |||||

| if win_length is None: | |||||

| win_length = n_fft | |||||

| n = n_fft + hop_length * (n_frames - 1) | |||||

| x = np.zeros(n, dtype=dtype) | |||||

| # Compute the squared window at the desired length | |||||

| win_sq = get_window(window, win_length, fftbins=True) | |||||

| win_sq = librosa_util.normalize(win_sq, norm=norm)**2 | |||||

| win_sq = librosa_util.pad_center(win_sq, n_fft) | |||||

| # Fill the envelope | |||||

| for i in range(n_frames): | |||||

| sample = i * hop_length | |||||

| x[sample:min(n, sample + n_fft) | |||||

| ] += win_sq[:max(0, min(n_fft, n - sample))] | |||||

| return x | |||||

| def griffin_lim(magnitudes, stft_fn, n_iters=30): | |||||

| """ | |||||

| PARAMS | |||||

| ------ | |||||

| magnitudes: spectrogram magnitudes | |||||

| stft_fn: STFT class with transform (STFT) and inverse (ISTFT) methods | |||||

| """ | |||||

| angles = np.angle(np.exp(2j * np.pi * np.random.rand(*magnitudes.size()))) | |||||

| angles = angles.astype(np.float32) | |||||

| angles = torch.autograd.Variable(torch.from_numpy(angles)) | |||||

| signal = stft_fn.inverse(magnitudes, angles).squeeze(1) | |||||

| for i in range(n_iters): | |||||

| _, angles = stft_fn.transform(signal) | |||||

| signal = stft_fn.inverse(magnitudes, angles).squeeze(1) | |||||

| return signal | |||||

| def dynamic_range_compression(x, C=1, clip_val=1e-5): | |||||

| """ | |||||

| PARAMS | |||||

| ------ | |||||

| C: compression factor | |||||

| """ | |||||

| return torch.log(torch.clamp(x, min=clip_val) * C) | |||||

| def dynamic_range_decompression(x, C=1): | |||||

| """ | |||||

| PARAMS | |||||

| ------ | |||||

| C: compression factor used to compress | |||||

| """ | |||||

| return torch.exp(x) / C | |||||

+ 8

- 0

FastSpeech/audio/hparams.py

View File

| @ -0,0 +1,8 @@ | |||||

| max_wav_value = 32768.0 | |||||

| sampling_rate = 22050 | |||||

| filter_length = 1024 | |||||

| hop_length = 256 | |||||

| win_length = 1024 | |||||

| n_mel_channels = 80 | |||||

| mel_fmin = 0.0 | |||||

| mel_fmax = 8000.0 | |||||

+ 158

- 0

FastSpeech/audio/stft.py

View File

| @ -0,0 +1,158 @@ | |||||

| import torch | |||||

| import torch.nn.functional as F | |||||

| from torch.autograd import Variable | |||||

| import numpy as np | |||||

| from scipy.signal import get_window | |||||

| from librosa.util import pad_center, tiny | |||||

| from librosa.filters import mel as librosa_mel_fn | |||||

| from audio.audio_processing import dynamic_range_compression | |||||

| from audio.audio_processing import dynamic_range_decompression | |||||

| from audio.audio_processing import window_sumsquare | |||||

| class STFT(torch.nn.Module): | |||||

| """adapted from Prem Seetharaman's https://github.com/pseeth/pytorch-stft""" | |||||

| def __init__(self, filter_length=800, hop_length=200, win_length=800, | |||||

| window='hann'): | |||||

| super(STFT, self).__init__() | |||||

| self.filter_length = filter_length | |||||

| self.hop_length = hop_length | |||||

| self.win_length = win_length | |||||

| self.window = window | |||||

| self.forward_transform = None | |||||

| scale = self.filter_length / self.hop_length | |||||

| fourier_basis = np.fft.fft(np.eye(self.filter_length)) | |||||

| cutoff = int((self.filter_length / 2 + 1)) | |||||

| fourier_basis = np.vstack([np.real(fourier_basis[:cutoff, :]), | |||||

| np.imag(fourier_basis[:cutoff, :])]) | |||||

| forward_basis = torch.FloatTensor(fourier_basis[:, None, :]) | |||||

| inverse_basis = torch.FloatTensor( | |||||

| np.linalg.pinv(scale * fourier_basis).T[:, None, :]) | |||||

| if window is not None: | |||||

| assert(filter_length >= win_length) | |||||

| # get window and zero center pad it to filter_length | |||||

| fft_window = get_window(window, win_length, fftbins=True) | |||||

| fft_window = pad_center(fft_window, filter_length) | |||||

| fft_window = torch.from_numpy(fft_window).float() | |||||

| # window the bases | |||||

| forward_basis *= fft_window | |||||

| inverse_basis *= fft_window | |||||

| self.register_buffer('forward_basis', forward_basis.float()) | |||||

| self.register_buffer('inverse_basis', inverse_basis.float()) | |||||

| def transform(self, input_data): | |||||

| num_batches = input_data.size(0) | |||||

| num_samples = input_data.size(1) | |||||

| self.num_samples = num_samples | |||||

| # similar to librosa, reflect-pad the input | |||||

| input_data = input_data.view(num_batches, 1, num_samples) | |||||

| input_data = F.pad( | |||||

| input_data.unsqueeze(1), | |||||

| (int(self.filter_length / 2), int(self.filter_length / 2), 0, 0), | |||||

| mode='reflect') | |||||

| input_data = input_data.squeeze(1) | |||||

| forward_transform = F.conv1d( | |||||

| input_data.cuda(), | |||||

| Variable(self.forward_basis, requires_grad=False).cuda(), | |||||

| stride=self.hop_length, | |||||

| padding=0).cpu() | |||||

| cutoff = int((self.filter_length / 2) + 1) | |||||

| real_part = forward_transform[:, :cutoff, :] | |||||

| imag_part = forward_transform[:, cutoff:, :] | |||||

| magnitude = torch.sqrt(real_part**2 + imag_part**2) | |||||

| phase = torch.autograd.Variable( | |||||

| torch.atan2(imag_part.data, real_part.data)) | |||||

| return magnitude, phase | |||||

| def inverse(self, magnitude, phase): | |||||

| recombine_magnitude_phase = torch.cat( | |||||

| [magnitude*torch.cos(phase), magnitude*torch.sin(phase)], dim=1) | |||||

| inverse_transform = F.conv_transpose1d( | |||||

| recombine_magnitude_phase, | |||||

| Variable(self.inverse_basis, requires_grad=False), | |||||

| stride=self.hop_length, | |||||

| padding=0) | |||||

| if self.window is not None: | |||||

| window_sum = window_sumsquare( | |||||

| self.window, magnitude.size(-1), hop_length=self.hop_length, | |||||

| win_length=self.win_length, n_fft=self.filter_length, | |||||

| dtype=np.float32) | |||||

| # remove modulation effects | |||||

| approx_nonzero_indices = torch.from_numpy( | |||||

| np.where(window_sum > tiny(window_sum))[0]) | |||||

| window_sum = torch.autograd.Variable( | |||||

| torch.from_numpy(window_sum), requires_grad=False) | |||||

| window_sum = window_sum.cuda() if magnitude.is_cuda else window_sum | |||||

| inverse_transform[:, :, | |||||

| approx_nonzero_indices] /= window_sum[approx_nonzero_indices] | |||||

| # scale by hop ratio | |||||

| inverse_transform *= float(self.filter_length) / self.hop_length | |||||

| inverse_transform = inverse_transform[:, :, int(self.filter_length/2):] | |||||

| inverse_transform = inverse_transform[:, | |||||

| :, :-int(self.filter_length/2):] | |||||

| return inverse_transform | |||||

| def forward(self, input_data): | |||||

| self.magnitude, self.phase = self.transform(input_data) | |||||

| reconstruction = self.inverse(self.magnitude, self.phase) | |||||

| return reconstruction | |||||

| class TacotronSTFT(torch.nn.Module): | |||||

| def __init__(self, filter_length=1024, hop_length=256, win_length=1024, | |||||

| n_mel_channels=80, sampling_rate=22050, mel_fmin=0.0, | |||||

| mel_fmax=8000.0): | |||||

| super(TacotronSTFT, self).__init__() | |||||

| self.n_mel_channels = n_mel_channels | |||||

| self.sampling_rate = sampling_rate | |||||

| self.stft_fn = STFT(filter_length, hop_length, win_length) | |||||

| mel_basis = librosa_mel_fn( | |||||

| sampling_rate, filter_length, n_mel_channels, mel_fmin, mel_fmax) | |||||

| mel_basis = torch.from_numpy(mel_basis).float() | |||||

| self.register_buffer('mel_basis', mel_basis) | |||||

| def spectral_normalize(self, magnitudes): | |||||

| output = dynamic_range_compression(magnitudes) | |||||

| return output | |||||

| def spectral_de_normalize(self, magnitudes): | |||||

| output = dynamic_range_decompression(magnitudes) | |||||

| return output | |||||

| def mel_spectrogram(self, y): | |||||

| """Computes mel-spectrograms from a batch of waves | |||||

| PARAMS | |||||

| ------ | |||||

| y: Variable(torch.FloatTensor) with shape (B, T) in range [-1, 1] | |||||

| RETURNS | |||||

| ------- | |||||

| mel_output: torch.FloatTensor of shape (B, n_mel_channels, T) | |||||

| """ | |||||

| assert(torch.min(y.data) >= -1) | |||||

| assert(torch.max(y.data) <= 1) | |||||

| magnitudes, phases = self.stft_fn.transform(y) | |||||

| magnitudes = magnitudes.data | |||||

| mel_output = torch.matmul(self.mel_basis, magnitudes) | |||||

| mel_output = self.spectral_normalize(mel_output) | |||||

| return mel_output | |||||

+ 66

- 0

FastSpeech/audio/tools.py

View File

| @ -0,0 +1,66 @@ | |||||

| import torch | |||||

| import numpy as np | |||||

| from scipy.io.wavfile import read | |||||

| from scipy.io.wavfile import write | |||||

| import audio.stft as stft | |||||

| import audio.hparams as hparams | |||||

| from audio.audio_processing import griffin_lim | |||||

| _stft = stft.TacotronSTFT( | |||||

| hparams.filter_length, hparams.hop_length, hparams.win_length, | |||||

| hparams.n_mel_channels, hparams.sampling_rate, hparams.mel_fmin, | |||||

| hparams.mel_fmax) | |||||

| def load_wav_to_torch(full_path): | |||||

| sampling_rate, data = read(full_path) | |||||

| return torch.FloatTensor(data.astype(np.float32)), sampling_rate | |||||

| def get_mel(filename): | |||||

| audio, sampling_rate = load_wav_to_torch(filename) | |||||

| if sampling_rate != _stft.sampling_rate: | |||||

| raise ValueError("{} {} SR doesn't match target {} SR".format( | |||||

| sampling_rate, _stft.sampling_rate)) | |||||

| audio_norm = audio / hparams.max_wav_value | |||||

| audio_norm = audio_norm.unsqueeze(0) | |||||

| audio_norm = torch.autograd.Variable(audio_norm, requires_grad=False) | |||||

| melspec = _stft.mel_spectrogram(audio_norm) | |||||

| melspec = torch.squeeze(melspec, 0) | |||||

| # melspec = torch.from_numpy(_normalize(melspec.numpy())) | |||||

| return melspec | |||||

| def get_mel_from_wav(audio): | |||||

| sampling_rate = hparams.sampling_rate | |||||

| if sampling_rate != _stft.sampling_rate: | |||||

| raise ValueError("{} {} SR doesn't match target {} SR".format( | |||||

| sampling_rate, _stft.sampling_rate)) | |||||

| audio_norm = audio / hparams.max_wav_value | |||||

| audio_norm = audio_norm.unsqueeze(0) | |||||

| audio_norm = torch.autograd.Variable(audio_norm, requires_grad=False) | |||||

| melspec = _stft.mel_spectrogram(audio_norm) | |||||

| melspec = torch.squeeze(melspec, 0) | |||||

| return melspec | |||||

| def inv_mel_spec(mel, out_filename, griffin_iters=60): | |||||

| mel = torch.stack([mel]) | |||||

| # mel = torch.stack([torch.from_numpy(_denormalize(mel.numpy()))]) | |||||

| mel_decompress = _stft.spectral_de_normalize(mel) | |||||

| mel_decompress = mel_decompress.transpose(1, 2).data.cpu() | |||||

| spec_from_mel_scaling = 1000 | |||||

| spec_from_mel = torch.mm(mel_decompress[0], _stft.mel_basis) | |||||

| spec_from_mel = spec_from_mel.transpose(0, 1).unsqueeze(0) | |||||

| spec_from_mel = spec_from_mel * spec_from_mel_scaling | |||||

| audio = griffin_lim(torch.autograd.Variable( | |||||

| spec_from_mel[:, :, :-1]), _stft.stft_fn, griffin_iters) | |||||

| audio = audio.squeeze() | |||||

| audio = audio.cpu().numpy() | |||||

| audio_path = out_filename | |||||

| write(audio_path, hparams.sampling_rate, audio) | |||||

+ 34

- 0

FastSpeech/data/ljspeech.py

View File

| @ -0,0 +1,34 @@ | |||||

| import numpy as np | |||||

| import os | |||||

| import audio as Audio | |||||

| def build_from_path(in_dir, out_dir): | |||||

| index = 1 | |||||

| out = list() | |||||

| with open(os.path.join(in_dir, 'metadata.csv'), encoding='utf-8') as f: | |||||

| for line in f: | |||||

| parts = line.strip().split('|') | |||||

| wav_path = os.path.join(in_dir, 'wavs', '%s.wav' % parts[0]) | |||||

| text = parts[2] | |||||

| out.append(_process_utterance(out_dir, index, wav_path, text)) | |||||

| if index % 100 == 0: | |||||

| print("Done %d" % index) | |||||

| index = index + 1 | |||||

| return out | |||||

| def _process_utterance(out_dir, index, wav_path, text): | |||||

| # Compute a mel-scale spectrogram from the wav: | |||||

| mel_spectrogram = Audio.tools.get_mel(wav_path).numpy().astype(np.float32) | |||||

| # print(mel_spectrogram) | |||||

| # Write the spectrograms to disk: | |||||

| mel_filename = 'ljspeech-mel-%05d.npy' % index | |||||

| np.save(os.path.join(out_dir, mel_filename), | |||||

| mel_spectrogram.T, allow_pickle=False) | |||||

| return text | |||||

+ 124

- 0

FastSpeech/dataset.py

View File

| @ -0,0 +1,124 @@ | |||||

| import torch | |||||

| from torch.nn import functional as F | |||||

| from torch.utils.data import Dataset, DataLoader | |||||

| import numpy as np | |||||

| import math | |||||

| import os | |||||

| import hparams | |||||

| import audio as Audio | |||||

| from text import text_to_sequence | |||||

| from utils import process_text, pad_1D, pad_2D | |||||

| device = torch.device('cuda' if torch.cuda.is_available() else 'cpu') | |||||

| class FastSpeechDataset(Dataset): | |||||

| """ LJSpeech """ | |||||

| def __init__(self): | |||||

| self.text = process_text(os.path.join("data", "train.txt")) | |||||

| def __len__(self): | |||||

| return len(self.text) | |||||

| def __getitem__(self, idx): | |||||

| mel_gt_name = os.path.join( | |||||

| hparams.mel_ground_truth, "ljspeech-mel-%05d.npy" % (idx+1)) | |||||

| mel_gt_target = np.load(mel_gt_name) | |||||

| D = np.load(os.path.join(hparams.alignment_path, str(idx)+".npy")) | |||||

| character = self.text[idx][0:len(self.text[idx])-1] | |||||

| character = np.array(text_to_sequence( | |||||

| character, hparams.text_cleaners)) | |||||

| sample = {"text": character, | |||||

| "mel_target": mel_gt_target, | |||||

| "D": D} | |||||

| return sample | |||||

| def reprocess(batch, cut_list): | |||||

| texts = [batch[ind]["text"] for ind in cut_list] | |||||

| mel_targets = [batch[ind]["mel_target"] for ind in cut_list] | |||||

| Ds = [batch[ind]["D"] for ind in cut_list] | |||||

| length_text = np.array([]) | |||||

| for text in texts: | |||||

| length_text = np.append(length_text, text.shape[0]) | |||||

| src_pos = list() | |||||

| max_len = int(max(length_text)) | |||||

| for length_src_row in length_text: | |||||

| src_pos.append(np.pad([i+1 for i in range(int(length_src_row))], | |||||

| (0, max_len-int(length_src_row)), 'constant')) | |||||

| src_pos = np.array(src_pos) | |||||

| length_mel = np.array(list()) | |||||

| for mel in mel_targets: | |||||

| length_mel = np.append(length_mel, mel.shape[0]) | |||||

| mel_pos = list() | |||||

| max_mel_len = int(max(length_mel)) | |||||

| for length_mel_row in length_mel: | |||||

| mel_pos.append(np.pad([i+1 for i in range(int(length_mel_row))], | |||||

| (0, max_mel_len-int(length_mel_row)), 'constant')) | |||||

| mel_pos = np.array(mel_pos) | |||||

| texts = pad_1D(texts) | |||||

| Ds = pad_1D(Ds) | |||||

| mel_targets = pad_2D(mel_targets) | |||||

| out = {"text": texts, | |||||

| "mel_target": mel_targets, | |||||

| "D": Ds, | |||||

| "mel_pos": mel_pos, | |||||

| "src_pos": src_pos, | |||||

| "mel_max_len": max_mel_len} | |||||

| return out | |||||

| def collate_fn(batch): | |||||

| len_arr = np.array([d["text"].shape[0] for d in batch]) | |||||

| index_arr = np.argsort(-len_arr) | |||||

| batchsize = len(batch) | |||||

| real_batchsize = int(math.sqrt(batchsize)) | |||||

| cut_list = list() | |||||

| for i in range(real_batchsize): | |||||

| cut_list.append(index_arr[i*real_batchsize:(i+1)*real_batchsize]) | |||||

| output = list() | |||||

| for i in range(real_batchsize): | |||||

| output.append(reprocess(batch, cut_list[i])) | |||||

| return output | |||||

| if __name__ == "__main__": | |||||

| # Test | |||||

| dataset = FastSpeechDataset() | |||||

| training_loader = DataLoader(dataset, | |||||

| batch_size=1, | |||||

| shuffle=False, | |||||

| collate_fn=collate_fn, | |||||

| drop_last=True, | |||||

| num_workers=0) | |||||

| total_step = hparams.epochs * len(training_loader) * hparams.batch_size | |||||

| cnt = 0 | |||||

| for i, batchs in enumerate(training_loader): | |||||

| for j, data_of_batch in enumerate(batchs): | |||||

| mel_target = torch.from_numpy( | |||||

| data_of_batch["mel_target"]).float().to(device) | |||||

| D = torch.from_numpy(data_of_batch["D"]).int().to(device) | |||||

| # print(mel_target.size()) | |||||

| # print(D.sum()) | |||||

| print(cnt) | |||||

| if mel_target.size(1) == D.sum().item(): | |||||

| cnt += 1 | |||||

| print(cnt) | |||||

+ 54

- 0

FastSpeech/fastspeech.py

View File

| @ -0,0 +1,54 @@ | |||||

| import torch | |||||

| import torch.nn as nn | |||||

| from transformer.Models import Encoder, Decoder | |||||

| from transformer.Layers import Linear, PostNet | |||||

| from modules import LengthRegulator | |||||

| import hparams as hp | |||||

| device = torch.device('cuda' if torch.cuda.is_available() else 'cpu') | |||||

| class FastSpeech(nn.Module): | |||||

| """ FastSpeech """ | |||||

| def __init__(self): | |||||

| super(FastSpeech, self).__init__() | |||||

| self.encoder = Encoder() | |||||

| self.length_regulator = LengthRegulator() | |||||

| self.decoder = Decoder() | |||||

| self.mel_linear = Linear(hp.decoder_output_size, hp.num_mels) | |||||

| self.postnet = PostNet() | |||||

| def forward(self, src_seq, src_pos, mel_pos=None, mel_max_length=None, length_target=None, alpha=1.0): | |||||

| encoder_output, _ = self.encoder(src_seq, src_pos) | |||||

| if self.training: | |||||

| length_regulator_output, duration_predictor_output = self.length_regulator(encoder_output, | |||||

| target=length_target, | |||||

| alpha=alpha, | |||||

| mel_max_length=mel_max_length) | |||||

| decoder_output = self.decoder(length_regulator_output, mel_pos) | |||||

| mel_output = self.mel_linear(decoder_output) | |||||

| mel_output_postnet = self.postnet(mel_output) + mel_output | |||||

| return mel_output, mel_output_postnet, duration_predictor_output | |||||

| else: | |||||

| length_regulator_output, decoder_pos = self.length_regulator(encoder_output, | |||||

| alpha=alpha) | |||||

| decoder_output = self.decoder(length_regulator_output, decoder_pos) | |||||

| mel_output = self.mel_linear(decoder_output) | |||||

| mel_output_postnet = self.postnet(mel_output) + mel_output | |||||

| return mel_output, mel_output_postnet | |||||

| if __name__ == "__main__": | |||||

| # Test | |||||

| model = FastSpeech() | |||||

| print(sum(param.numel() for param in model.parameters())) | |||||

+ 317

- 0

FastSpeech/glow.py

View File

| @ -0,0 +1,317 @@ | |||||

| # ***************************************************************************** | |||||

| # Copyright (c) 2018, NVIDIA CORPORATION. All rights reserved. | |||||

| # | |||||

| # Redistribution and use in source and binary forms, with or without | |||||

| # modification, are permitted provided that the following conditions are met: | |||||

| # * Redistributions of source code must retain the above copyright | |||||

| # notice, this list of conditions and the following disclaimer. | |||||

| # * Redistributions in binary form must reproduce the above copyright | |||||

| # notice, this list of conditions and the following disclaimer in the | |||||

| # documentation and/or other materials provided with the distribution. | |||||

| # * Neither the name of the NVIDIA CORPORATION nor the | |||||

| # names of its contributors may be used to endorse or promote products | |||||

| # derived from this software without specific prior written permission. | |||||

| # | |||||

| # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND | |||||

| # ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED | |||||

| # WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE | |||||

| # DISCLAIMED. IN NO EVENT SHALL NVIDIA CORPORATION BE LIABLE FOR ANY | |||||

| # DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES | |||||

| # (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; | |||||

| # LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND | |||||

| # ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT | |||||

| # (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS | |||||

| # SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. | |||||

| # | |||||

| # ***************************************************************************** | |||||

| import copy | |||||

| import torch | |||||

| from torch.autograd import Variable | |||||

| import torch.nn.functional as F | |||||

| @torch.jit.script | |||||

| def fused_add_tanh_sigmoid_multiply(input_a, input_b, n_channels): | |||||

| n_channels_int = n_channels[0] | |||||

| in_act = input_a+input_b | |||||

| t_act = torch.nn.functional.tanh(in_act[:, :n_channels_int, :]) | |||||

| s_act = torch.nn.functional.sigmoid(in_act[:, n_channels_int:, :]) | |||||

| acts = t_act * s_act | |||||

| return acts | |||||

| class WaveGlowLoss(torch.nn.Module): | |||||

| def __init__(self, sigma=1.0): | |||||

| super(WaveGlowLoss, self).__init__() | |||||

| self.sigma = sigma | |||||

| def forward(self, model_output): | |||||

| z, log_s_list, log_det_W_list = model_output | |||||

| for i, log_s in enumerate(log_s_list): | |||||

| if i == 0: | |||||

| log_s_total = torch.sum(log_s) | |||||

| log_det_W_total = log_det_W_list[i] | |||||

| else: | |||||

| log_s_total = log_s_total + torch.sum(log_s) | |||||

| log_det_W_total += log_det_W_list[i] | |||||

| loss = torch.sum(z*z)/(2*self.sigma*self.sigma) - \ | |||||

| log_s_total - log_det_W_total | |||||

| return loss/(z.size(0)*z.size(1)*z.size(2)) | |||||

| class Invertible1x1Conv(torch.nn.Module): | |||||

| """ | |||||

| The layer outputs both the convolution, and the log determinant | |||||

| of its weight matrix. If reverse=True it does convolution with | |||||

| inverse | |||||

| """ | |||||

| def __init__(self, c): | |||||

| super(Invertible1x1Conv, self).__init__() | |||||

| self.conv = torch.nn.Conv1d(c, c, kernel_size=1, stride=1, padding=0, | |||||

| bias=False) | |||||

| # Sample a random orthonormal matrix to initialize weights | |||||

| W = torch.qr(torch.FloatTensor(c, c).normal_())[0] | |||||

| # Ensure determinant is 1.0 not -1.0 | |||||

| if torch.det(W) < 0: | |||||

| W[:, 0] = -1*W[:, 0] | |||||

| W = W.view(c, c, 1) | |||||

| self.conv.weight.data = W | |||||

| def forward(self, z, reverse=False): | |||||

| # shape | |||||

| batch_size, group_size, n_of_groups = z.size() | |||||

| W = self.conv.weight.squeeze() | |||||

| if reverse: | |||||

| if not hasattr(self, 'W_inverse'): | |||||

| # Reverse computation | |||||

| W_inverse = W.inverse() | |||||

| W_inverse = Variable(W_inverse[..., None]) | |||||

| if z.type() == 'torch.cuda.HalfTensor': | |||||

| W_inverse = W_inverse.half() | |||||

| self.W_inverse = W_inverse | |||||

| z = F.conv1d(z, self.W_inverse, bias=None, stride=1, padding=0) | |||||

| return z | |||||

| else: | |||||

| # Forward computation | |||||

| log_det_W = batch_size * n_of_groups * torch.logdet(W) | |||||

| z = self.conv(z) | |||||

| return z, log_det_W | |||||

| class WN(torch.nn.Module): | |||||

| """ | |||||

| This is the WaveNet like layer for the affine coupling. The primary difference | |||||

| from WaveNet is the convolutions need not be causal. There is also no dilation | |||||

| size reset. The dilation only doubles on each layer | |||||

| """ | |||||

| def __init__(self, n_in_channels, n_mel_channels, n_layers, n_channels, | |||||

| kernel_size): | |||||

| super(WN, self).__init__() | |||||

| assert(kernel_size % 2 == 1) | |||||

| assert(n_channels % 2 == 0) | |||||

| self.n_layers = n_layers | |||||

| self.n_channels = n_channels | |||||

| self.in_layers = torch.nn.ModuleList() | |||||

| self.res_skip_layers = torch.nn.ModuleList() | |||||

| self.cond_layers = torch.nn.ModuleList() | |||||

| start = torch.nn.Conv1d(n_in_channels, n_channels, 1) | |||||

| start = torch.nn.utils.weight_norm(start, name='weight') | |||||

| self.start = start | |||||

| # Initializing last layer to 0 makes the affine coupling layers | |||||

| # do nothing at first. This helps with training stability | |||||

| end = torch.nn.Conv1d(n_channels, 2*n_in_channels, 1) | |||||

| end.weight.data.zero_() | |||||

| end.bias.data.zero_() | |||||

| self.end = end | |||||

| for i in range(n_layers): | |||||

| dilation = 2 ** i | |||||

| padding = int((kernel_size*dilation - dilation)/2) | |||||

| in_layer = torch.nn.Conv1d(n_channels, 2*n_channels, kernel_size, | |||||

| dilation=dilation, padding=padding) | |||||

| in_layer = torch.nn.utils.weight_norm(in_layer, name='weight') | |||||

| self.in_layers.append(in_layer) | |||||

| cond_layer = torch.nn.Conv1d(n_mel_channels, 2*n_channels, 1) | |||||

| cond_layer = torch.nn.utils.weight_norm(cond_layer, name='weight') | |||||

| self.cond_layers.append(cond_layer) | |||||

| # last one is not necessary | |||||

| if i < n_layers - 1: | |||||

| res_skip_channels = 2*n_channels | |||||

| else: | |||||

| res_skip_channels = n_channels | |||||

| res_skip_layer = torch.nn.Conv1d(n_channels, res_skip_channels, 1) | |||||

| res_skip_layer = torch.nn.utils.weight_norm( | |||||

| res_skip_layer, name='weight') | |||||

| self.res_skip_layers.append(res_skip_layer) | |||||

| def forward(self, forward_input): | |||||

| audio, spect = forward_input | |||||

| audio = self.start(audio) | |||||

| for i in range(self.n_layers): | |||||

| acts = fused_add_tanh_sigmoid_multiply( | |||||

| self.in_layers[i](audio), | |||||

| self.cond_layers[i](spect), | |||||

| torch.IntTensor([self.n_channels])) | |||||

| res_skip_acts = self.res_skip_layers[i](acts) | |||||

| if i < self.n_layers - 1: | |||||

| audio = res_skip_acts[:, :self.n_channels, :] + audio | |||||

| skip_acts = res_skip_acts[:, self.n_channels:, :] | |||||

| else: | |||||

| skip_acts = res_skip_acts | |||||

| if i == 0: | |||||

| output = skip_acts | |||||

| else: | |||||

| output = skip_acts + output | |||||

| return self.end(output) | |||||

| class WaveGlow(torch.nn.Module): | |||||

| def __init__(self, n_mel_channels, n_flows, n_group, n_early_every, | |||||

| n_early_size, WN_config): | |||||

| super(WaveGlow, self).__init__() | |||||

| self.upsample = torch.nn.ConvTranspose1d(n_mel_channels, | |||||

| n_mel_channels, | |||||

| 1024, stride=256) | |||||

| assert(n_group % 2 == 0) | |||||

| self.n_flows = n_flows | |||||

| self.n_group = n_group | |||||

| self.n_early_every = n_early_every | |||||

| self.n_early_size = n_early_size | |||||

| self.WN = torch.nn.ModuleList() | |||||

| self.convinv = torch.nn.ModuleList() | |||||

| n_half = int(n_group/2) | |||||

| # Set up layers with the right sizes based on how many dimensions | |||||

| # have been output already | |||||

| n_remaining_channels = n_group | |||||

| for k in range(n_flows): | |||||

| if k % self.n_early_every == 0 and k > 0: | |||||

| n_half = n_half - int(self.n_early_size/2) | |||||

| n_remaining_channels = n_remaining_channels - self.n_early_size | |||||

| self.convinv.append(Invertible1x1Conv(n_remaining_channels)) | |||||

| self.WN.append(WN(n_half, n_mel_channels*n_group, **WN_config)) | |||||

| self.n_remaining_channels = n_remaining_channels # Useful during inference | |||||

| def forward(self, forward_input): | |||||

| """ | |||||

| forward_input[0] = mel_spectrogram: batch x n_mel_channels x frames | |||||

| forward_input[1] = audio: batch x time | |||||

| """ | |||||

| spect, audio = forward_input | |||||

| # Upsample spectrogram to size of audio | |||||

| spect = self.upsample(spect) | |||||

| assert(spect.size(2) >= audio.size(1)) | |||||

| if spect.size(2) > audio.size(1): | |||||

| spect = spect[:, :, :audio.size(1)] | |||||

| spect = spect.unfold(2, self.n_group, self.n_group).permute(0, 2, 1, 3) | |||||

| spect = spect.contiguous().view(spect.size(0), spect.size(1), -1).permute(0, 2, 1) | |||||

| audio = audio.unfold(1, self.n_group, self.n_group).permute(0, 2, 1) | |||||

| output_audio = [] | |||||

| log_s_list = [] | |||||

| log_det_W_list = [] | |||||

| for k in range(self.n_flows): | |||||

| if k % self.n_early_every == 0 and k > 0: | |||||

| output_audio.append(audio[:, :self.n_early_size, :]) | |||||

| audio = audio[:, self.n_early_size:, :] | |||||

| audio, log_det_W = self.convinv[k](audio) | |||||

| log_det_W_list.append(log_det_W) | |||||

| n_half = int(audio.size(1)/2) | |||||

| audio_0 = audio[:, :n_half, :] | |||||

| audio_1 = audio[:, n_half:, :] | |||||

| output = self.WN[k]((audio_0, spect)) | |||||

| log_s = output[:, n_half:, :] | |||||

| b = output[:, :n_half, :] | |||||

| audio_1 = torch.exp(log_s)*audio_1 + b | |||||

| log_s_list.append(log_s) | |||||

| audio = torch.cat([audio_0, audio_1], 1) | |||||

| output_audio.append(audio) | |||||

| return torch.cat(output_audio, 1), log_s_list, log_det_W_list | |||||

| def infer(self, spect, sigma=1.0): | |||||

| spect = self.upsample(spect) | |||||

| # trim conv artifacts. maybe pad spec to kernel multiple | |||||

| time_cutoff = self.upsample.kernel_size[0] - self.upsample.stride[0] | |||||

| spect = spect[:, :, :-time_cutoff] | |||||

| spect = spect.unfold(2, self.n_group, self.n_group).permute(0, 2, 1, 3) | |||||

| spect = spect.contiguous().view(spect.size(0), spect.size(1), -1).permute(0, 2, 1) | |||||

| if spect.type() == 'torch.cuda.HalfTensor': | |||||

| audio = torch.cuda.HalfTensor(spect.size(0), | |||||

| self.n_remaining_channels, | |||||

| spect.size(2)).normal_() | |||||

| else: | |||||

| audio = torch.cuda.FloatTensor(spect.size(0), | |||||

| self.n_remaining_channels, | |||||

| spect.size(2)).normal_() | |||||

| audio = torch.autograd.Variable(sigma*audio) | |||||

| for k in reversed(range(self.n_flows)): | |||||

| n_half = int(audio.size(1)/2) | |||||

| audio_0 = audio[:, :n_half, :] | |||||

| audio_1 = audio[:, n_half:, :] | |||||

| output = self.WN[k]((audio_0, spect)) | |||||

| s = output[:, n_half:, :] | |||||

| b = output[:, :n_half, :] | |||||

| audio_1 = (audio_1 - b)/torch.exp(s) | |||||

| audio = torch.cat([audio_0, audio_1], 1) | |||||

| audio = self.convinv[k](audio, reverse=True) | |||||

| if k % self.n_early_every == 0 and k > 0: | |||||

| if spect.type() == 'torch.cuda.HalfTensor': | |||||

| z = torch.cuda.HalfTensor(spect.size( | |||||

| 0), self.n_early_size, spect.size(2)).normal_() | |||||

| else: | |||||

| z = torch.cuda.FloatTensor(spect.size( | |||||

| 0), self.n_early_size, spect.size(2)).normal_() | |||||

| audio = torch.cat((sigma*z, audio), 1) | |||||

| audio = audio.permute(0, 2, 1).contiguous().view( | |||||

| audio.size(0), -1).data | |||||

| return audio | |||||

| @staticmethod | |||||

| def remove_weightnorm(model): | |||||

| waveglow = model | |||||

| for WN in waveglow.WN: | |||||

| WN.start = torch.nn.utils.remove_weight_norm(WN.start) | |||||

| WN.in_layers = remove(WN.in_layers) | |||||

| WN.cond_layers = remove(WN.cond_layers) | |||||

| WN.res_skip_layers = remove(WN.res_skip_layers) | |||||

| return waveglow | |||||

| def remove(conv_list): | |||||

| new_conv_list = torch.nn.ModuleList() | |||||

| for old_conv in conv_list: | |||||

| old_conv = torch.nn.utils.remove_weight_norm(old_conv) | |||||

| new_conv_list.append(old_conv) | |||||

| return new_conv_list | |||||

+ 52

- 0

FastSpeech/hparams.py

View File

| @ -0,0 +1,52 @@ | |||||

| from text import symbols | |||||

| # Text | |||||

| text_cleaners = ['english_cleaners'] | |||||

| # Mel | |||||

| n_mel_channels = 80 | |||||

| num_mels = 80 | |||||

| # FastSpeech | |||||

| vocab_size = 1024 | |||||

| N = 6 | |||||

| Head = 2 | |||||

| d_model = 384 | |||||

| duration_predictor_filter_size = 256 | |||||

| duration_predictor_kernel_size = 3 | |||||

| dropout = 0.1 | |||||

| word_vec_dim = 384 | |||||

| encoder_n_layer = 6 | |||||

| encoder_head = 2 | |||||

| encoder_conv1d_filter_size = 1536 | |||||

| max_sep_len = 2048 | |||||

| encoder_output_size = 384 | |||||

| decoder_n_layer = 6 | |||||

| decoder_head = 2 | |||||

| decoder_conv1d_filter_size = 1536 | |||||

| decoder_output_size = 384 | |||||

| fft_conv1d_kernel = 3 | |||||

| fft_conv1d_padding = 1 | |||||

| duration_predictor_filter_size = 256 | |||||

| duration_predictor_kernel_size = 3 | |||||

| dropout = 0.1 | |||||

| # Train | |||||

| alignment_path = "./alignments" | |||||

| checkpoint_path = "./model_new" | |||||

| logger_path = "./logger" | |||||

| mel_ground_truth = "./mels" | |||||

| batch_size = 64 | |||||

| epochs = 1000 | |||||

| n_warm_up_step = 4000 | |||||

| learning_rate = 1e-3 | |||||

| weight_decay = 1e-6 | |||||

| grad_clip_thresh = 1.0 | |||||

| decay_step = [500000, 1000000, 2000000] | |||||

| save_step = 1000 | |||||

| log_step = 5 | |||||

| clear_Time = 20 | |||||

BIN

FastSpeech/img/model.png

View File

BIN

FastSpeech/img/model_test.jpg

View File

BIN

FastSpeech/img/tacotron2_outputs.jpg

View File

+ 29

- 0

FastSpeech/loss.py

View File

| @ -0,0 +1,29 @@ | |||||

| import torch | |||||

| import torch.nn as nn | |||||

| class FastSpeechLoss(nn.Module): | |||||

| """ FastSPeech Loss """ | |||||

| def __init__(self): | |||||

| super(FastSpeechLoss, self).__init__() | |||||

| self.mse_loss = nn.MSELoss() | |||||

| self.l1_loss = nn.L1Loss() | |||||

| def forward(self, mel, mel_postnet, duration_predicted, mel_target, duration_predictor_target): | |||||

| mel_target.requires_grad = False | |||||

| mel_loss = self.mse_loss(mel, mel_target) | |||||

| mel_postnet_loss = self.mse_loss(mel_postnet, mel_target) | |||||

| duration_predictor_target.requires_grad = False | |||||

| # duration_predictor_target = duration_predictor_target + 1 | |||||

| # duration_predictor_target = torch.log( | |||||

| # duration_predictor_target.float()) | |||||

| # print(duration_predictor_target) | |||||

| # print(duration_predicted) | |||||

| duration_predictor_loss = self.l1_loss( | |||||

| duration_predicted, duration_predictor_target.float()) | |||||

| return mel_loss, mel_postnet_loss, duration_predictor_loss | |||||

+ 404

- 0

FastSpeech/modules.py

View File

| @ -0,0 +1,404 @@ | |||||

| import torch | |||||

| import torch.nn as nn | |||||

| import torch.nn.functional as F | |||||

| from collections import OrderedDict | |||||

| import numpy as np | |||||

| import copy | |||||

| import math | |||||

| import hparams as hp | |||||

| import utils | |||||

| device = torch.device('cuda' if torch.cuda.is_available() else 'cpu') | |||||

| def get_sinusoid_encoding_table(n_position, d_hid, padding_idx=None): | |||||

| ''' Sinusoid position encoding table ''' | |||||

| def cal_angle(position, hid_idx): | |||||

| return position / np.power(10000, 2 * (hid_idx // 2) / d_hid) | |||||

| def get_posi_angle_vec(position): | |||||

| return [cal_angle(position, hid_j) for hid_j in range(d_hid)] | |||||

| sinusoid_table = np.array([get_posi_angle_vec(pos_i) | |||||

| for pos_i in range(n_position)]) | |||||

| sinusoid_table[:, 0::2] = np.sin(sinusoid_table[:, 0::2]) # dim 2i | |||||

| sinusoid_table[:, 1::2] = np.cos(sinusoid_table[:, 1::2]) # dim 2i+1 | |||||

| if padding_idx is not None: | |||||

| # zero vector for padding dimension | |||||

| sinusoid_table[padding_idx] = 0. | |||||

| return torch.FloatTensor(sinusoid_table) | |||||

| def clones(module, N): | |||||

| return nn.ModuleList([copy.deepcopy(module) for _ in range(N)]) | |||||

| class LengthRegulator(nn.Module): | |||||

| """ Length Regulator """ | |||||

| def __init__(self): | |||||

| super(LengthRegulator, self).__init__() | |||||

| self.duration_predictor = DurationPredictor() | |||||

| def LR(self, x, duration_predictor_output, alpha=1.0, mel_max_length=None): | |||||

| output = list() | |||||

| for batch, expand_target in zip(x, duration_predictor_output): | |||||

| output.append(self.expand(batch, expand_target, alpha)) | |||||

| if mel_max_length: | |||||

| output = utils.pad(output, mel_max_length) | |||||

| else: | |||||

| output = utils.pad(output) | |||||

| return output | |||||

| def expand(self, batch, predicted, alpha): | |||||

| out = list() | |||||

| for i, vec in enumerate(batch): | |||||

| expand_size = predicted[i].item() | |||||

| out.append(vec.expand(int(expand_size*alpha), -1)) | |||||

| out = torch.cat(out, 0) | |||||

| return out | |||||

| def rounding(self, num): | |||||

| if num - int(num) >= 0.5: | |||||

| return int(num) + 1 | |||||

| else: | |||||

| return int(num) | |||||

| def forward(self, x, alpha=1.0, target=None, mel_max_length=None): | |||||

| duration_predictor_output = self.duration_predictor(x) | |||||

| if self.training: | |||||

| output = self.LR(x, target, mel_max_length=mel_max_length) | |||||

| return output, duration_predictor_output | |||||

| else: | |||||

| for idx, ele in enumerate(duration_predictor_output[0]): | |||||

| duration_predictor_output[0][idx] = self.rounding(ele) | |||||

| output = self.LR(x, duration_predictor_output, alpha) | |||||

| mel_pos = torch.stack( | |||||

| [torch.Tensor([i+1 for i in range(output.size(1))])]).long().to(device) | |||||

| return output, mel_pos | |||||

| class DurationPredictor(nn.Module): | |||||

| """ Duration Predictor """ | |||||

| def __init__(self): | |||||

| super(DurationPredictor, self).__init__() | |||||

| self.input_size = hp.d_model | |||||

| self.filter_size = hp.duration_predictor_filter_size | |||||

| self.kernel = hp.duration_predictor_kernel_size | |||||

| self.conv_output_size = hp.duration_predictor_filter_size | |||||

| self.dropout = hp.dropout | |||||

| self.conv_layer = nn.Sequential(OrderedDict([ | |||||

| ("conv1d_1", Conv(self.input_size, | |||||

| self.filter_size, | |||||

| kernel_size=self.kernel, | |||||

| padding=1)), | |||||

| ("layer_norm_1", nn.LayerNorm(self.filter_size)), | |||||

| ("relu_1", nn.ReLU()), | |||||

| ("dropout_1", nn.Dropout(self.dropout)), | |||||

| ("conv1d_2", Conv(self.filter_size, | |||||

| self.filter_size, | |||||

| kernel_size=self.kernel, | |||||

| padding=1)), | |||||

| ("layer_norm_2", nn.LayerNorm(self.filter_size)), | |||||

| ("relu_2", nn.ReLU()), | |||||

| ("dropout_2", nn.Dropout(self.dropout)) | |||||

| ])) | |||||

| self.linear_layer = Linear(self.conv_output_size, 1) | |||||

| self.relu = nn.ReLU() | |||||

| def forward(self, encoder_output): | |||||

| out = self.conv_layer(encoder_output) | |||||

| out = self.linear_layer(out) | |||||

| out = self.relu(out) | |||||

| out = out.squeeze() | |||||

| if not self.training: | |||||

| out = out.unsqueeze(0) | |||||

| return out | |||||

| class Conv(nn.Module): | |||||

| """ | |||||

| Convolution Module | |||||

| """ | |||||

| def __init__(self, | |||||

| in_channels, | |||||

| out_channels, | |||||

| kernel_size=1, | |||||

| stride=1, | |||||

| padding=0, | |||||

| dilation=1, | |||||

| bias=True, | |||||

| w_init='linear'): | |||||

| """ | |||||

| :param in_channels: dimension of input | |||||

| :param out_channels: dimension of output | |||||

| :param kernel_size: size of kernel | |||||

| :param stride: size of stride | |||||

| :param padding: size of padding | |||||

| :param dilation: dilation rate | |||||

| :param bias: boolean. if True, bias is included. | |||||

| :param w_init: str. weight inits with xavier initialization. | |||||

| """ | |||||

| super(Conv, self).__init__() | |||||

| self.conv = nn.Conv1d(in_channels, | |||||

| out_channels, | |||||

| kernel_size=kernel_size, | |||||

| stride=stride, | |||||

| padding=padding, | |||||

| dilation=dilation, | |||||

| bias=bias) | |||||

| nn.init.xavier_uniform_( | |||||

| self.conv.weight, gain=nn.init.calculate_gain(w_init)) | |||||

| def forward(self, x): | |||||

| x = x.contiguous().transpose(1, 2) | |||||

| x = self.conv(x) | |||||

| x = x.contiguous().transpose(1, 2) | |||||

| return x | |||||

| class Linear(nn.Module): | |||||

| """ | |||||

| Linear Module | |||||

| """ | |||||

| def __init__(self, in_dim, out_dim, bias=True, w_init='linear'): | |||||

| """ | |||||

| :param in_dim: dimension of input | |||||

| :param out_dim: dimension of output | |||||

| :param bias: boolean. if True, bias is included. | |||||

| :param w_init: str. weight inits with xavier initialization. | |||||

| """ | |||||

| super(Linear, self).__init__() | |||||

| self.linear_layer = nn.Linear(in_dim, out_dim, bias=bias) | |||||

| nn.init.xavier_uniform_( | |||||

| self.linear_layer.weight, | |||||

| gain=nn.init.calculate_gain(w_init)) | |||||

| def forward(self, x): | |||||

| return self.linear_layer(x) | |||||

| class FFN(nn.Module): | |||||

| """ | |||||

| Positionwise Feed-Forward Network | |||||

| """ | |||||

| def __init__(self, num_hidden): | |||||

| """ | |||||

| :param num_hidden: dimension of hidden | |||||

| """ | |||||

| super(FFN, self).__init__() | |||||

| self.w_1 = Conv(num_hidden, num_hidden * 4, | |||||

| kernel_size=3, padding=1, w_init='relu') | |||||

| self.w_2 = Conv(num_hidden * 4, num_hidden, kernel_size=3, padding=1) | |||||

| self.dropout = nn.Dropout(p=0.1) | |||||

| self.layer_norm = nn.LayerNorm(num_hidden) | |||||

| def forward(self, input_): | |||||

| # FFN Network | |||||

| x = input_ | |||||

| x = self.w_2(torch.relu(self.w_1(x))) | |||||

| # residual connection | |||||

| x = x + input_ | |||||

| # dropout | |||||

| x = self.dropout(x) | |||||

| # layer normalization | |||||

| x = self.layer_norm(x) | |||||

| return x | |||||

| class MultiheadAttention(nn.Module): | |||||

| """ | |||||

| Multihead attention mechanism (dot attention) | |||||

| """ | |||||

| def __init__(self, num_hidden_k): | |||||

| """ | |||||

| :param num_hidden_k: dimension of hidden | |||||

| """ | |||||

| super(MultiheadAttention, self).__init__() | |||||

| self.num_hidden_k = num_hidden_k | |||||

| self.attn_dropout = nn.Dropout(p=0.1) | |||||

| def forward(self, key, value, query, mask=None, query_mask=None): | |||||

| # Get attention score | |||||

| attn = torch.bmm(query, key.transpose(1, 2)) | |||||

| attn = attn / math.sqrt(self.num_hidden_k) | |||||

| # Masking to ignore padding (key side) | |||||

| if mask is not None: | |||||

| attn = attn.masked_fill(mask, -2 ** 32 + 1) | |||||

| attn = torch.softmax(attn, dim=-1) | |||||

| else: | |||||

| attn = torch.softmax(attn, dim=-1) | |||||

| # Masking to ignore padding (query side) | |||||

| if query_mask is not None: | |||||

| attn = attn * query_mask | |||||

| # Dropout | |||||

| attn = self.attn_dropout(attn) | |||||

| # Get Context Vector | |||||

| result = torch.bmm(attn, value) | |||||

| return result, attn | |||||

| class Attention(nn.Module): | |||||

| """ | |||||

| Attention Network | |||||

| """ | |||||

| def __init__(self, num_hidden, h=2): | |||||

| """ | |||||

| :param num_hidden: dimension of hidden | |||||

| :param h: num of heads | |||||

| """ | |||||

| super(Attention, self).__init__() | |||||

| self.num_hidden = num_hidden | |||||

| self.num_hidden_per_attn = num_hidden // h | |||||

| self.h = h | |||||

| self.key = Linear(num_hidden, num_hidden, bias=False) | |||||

| self.value = Linear(num_hidden, num_hidden, bias=False) | |||||

| self.query = Linear(num_hidden, num_hidden, bias=False) | |||||

| self.multihead = MultiheadAttention(self.num_hidden_per_attn) | |||||

| self.residual_dropout = nn.Dropout(p=0.1) | |||||

| self.final_linear = Linear(num_hidden * 2, num_hidden) | |||||

| self.layer_norm_1 = nn.LayerNorm(num_hidden) | |||||

| def forward(self, memory, decoder_input, mask=None, query_mask=None): | |||||

| batch_size = memory.size(0) | |||||

| seq_k = memory.size(1) | |||||

| seq_q = decoder_input.size(1) | |||||

| # Repeat masks h times | |||||

| if query_mask is not None: | |||||

| query_mask = query_mask.unsqueeze(-1).repeat(1, 1, seq_k) | |||||

| query_mask = query_mask.repeat(self.h, 1, 1) | |||||

| if mask is not None: | |||||

| mask = mask.repeat(self.h, 1, 1) | |||||

| # Make multihead | |||||

| key = self.key(memory).view(batch_size, | |||||

| seq_k, | |||||

| self.h, | |||||

| self.num_hidden_per_attn) | |||||

| value = self.value(memory).view(batch_size, | |||||

| seq_k, | |||||

| self.h, | |||||

| self.num_hidden_per_attn) | |||||

| query = self.query(decoder_input).view(batch_size, | |||||

| seq_q, | |||||

| self.h, | |||||

| self.num_hidden_per_attn) | |||||

| key = key.permute(2, 0, 1, 3).contiguous().view(-1, | |||||

| seq_k, | |||||

| self.num_hidden_per_attn) | |||||

| value = value.permute(2, 0, 1, 3).contiguous().view(-1, | |||||

| seq_k, | |||||

| self.num_hidden_per_attn) | |||||

| query = query.permute(2, 0, 1, 3).contiguous().view(-1, | |||||

| seq_q, | |||||

| self.num_hidden_per_attn) | |||||

| # Get context vector | |||||

| result, attns = self.multihead( | |||||

| key, value, query, mask=mask, query_mask=query_mask) | |||||

| # Concatenate all multihead context vector | |||||

| result = result.view(self.h, batch_size, seq_q, | |||||

| self.num_hidden_per_attn) | |||||

| result = result.permute(1, 2, 0, 3).contiguous().view( | |||||

| batch_size, seq_q, -1) | |||||

| # Concatenate context vector with input (most important) | |||||

| result = torch.cat([decoder_input, result], dim=-1) | |||||

| # Final linear | |||||

| result = self.final_linear(result) | |||||

| # Residual dropout & connection | |||||

| result = self.residual_dropout(result) | |||||

| result = result + decoder_input | |||||

| # Layer normalization | |||||

| result = self.layer_norm_1(result) | |||||

| return result, attns | |||||

| class FFTBlock(torch.nn.Module): | |||||

| """FFT Block""" | |||||

| def __init__(self, | |||||

| d_model, | |||||

| n_head=hp.Head): | |||||

| super(FFTBlock, self).__init__() | |||||

| self.slf_attn = clones(Attention(d_model), hp.N) | |||||

| self.pos_ffn = clones(FFN(d_model), hp.N) | |||||

| self.pos_emb = nn.Embedding.from_pretrained(get_sinusoid_encoding_table(1024, | |||||

| d_model, | |||||

| padding_idx=0), freeze=True) | |||||

| def forward(self, x, pos, return_attns=False): | |||||

| # Get character mask | |||||

| if self.training: | |||||

| c_mask = pos.ne(0).type(torch.float) | |||||

| mask = pos.eq(0).unsqueeze(1).repeat(1, x.size(1), 1) | |||||

| else: | |||||

| c_mask, mask = None, None | |||||

| # Get positional embedding, apply alpha and add | |||||

| pos = self.pos_emb(pos) | |||||

| x = x + pos | |||||

| # Attention encoder-encoder | |||||

| attns = list() | |||||

| for slf_attn, ffn in zip(self.slf_attn, self.pos_ffn): | |||||

| x, attn = slf_attn(x, x, mask=mask, query_mask=c_mask) | |||||

| x = ffn(x) | |||||

| attns.append(attn) | |||||

| return x, attns | |||||

+ 44

- 0

FastSpeech/optimizer.py

View File

| @ -0,0 +1,44 @@ | |||||

| import numpy as np | |||||

| class ScheduledOptim(): | |||||

| ''' A simple wrapper class for learning rate scheduling ''' | |||||

| def __init__(self, optimizer, d_model, n_warmup_steps, current_steps): | |||||

| self._optimizer = optimizer | |||||

| self.n_warmup_steps = n_warmup_steps | |||||

| self.n_current_steps = current_steps | |||||

| self.init_lr = np.power(d_model, -0.5) | |||||

| def step_and_update_lr_frozen(self, learning_rate_frozen): | |||||

| for param_group in self._optimizer.param_groups: | |||||

| param_group['lr'] = learning_rate_frozen | |||||

| self._optimizer.step() | |||||

| def step_and_update_lr(self): | |||||

| self._update_learning_rate() | |||||

| self._optimizer.step() | |||||

| def get_learning_rate(self): | |||||

| learning_rate = 0.0 | |||||

| for param_group in self._optimizer.param_groups: | |||||

| learning_rate = param_group['lr'] | |||||

| return learning_rate | |||||

| def zero_grad(self): | |||||

| # print(self.init_lr) | |||||

| self._optimizer.zero_grad() | |||||

| def _get_lr_scale(self): | |||||

| return np.min([ | |||||

| np.power(self.n_current_steps, -0.5), | |||||

| np.power(self.n_warmup_steps, -1.5) * self.n_current_steps]) | |||||

| def _update_learning_rate(self): | |||||

| ''' Learning rate scheduling per step ''' | |||||

| self.n_current_steps += 1 | |||||

| lr = self.init_lr * self._get_lr_scale() | |||||

| for param_group in self._optimizer.param_groups: | |||||

| param_group['lr'] = lr | |||||

+ 61

- 0

FastSpeech/preprocess.py

View File

| @ -0,0 +1,61 @@ | |||||

| import torch | |||||

| import numpy as np | |||||

| import shutil | |||||

| import os | |||||

| from utils import load_data, get_Tacotron2, get_WaveGlow | |||||

| from utils import process_text, load_data | |||||

| from data import ljspeech | |||||

| import hparams as hp | |||||

| import waveglow | |||||

| import audio as Audio | |||||

| def preprocess_ljspeech(filename): | |||||

| in_dir = filename | |||||

| out_dir = hp.mel_ground_truth | |||||

| if not os.path.exists(out_dir): | |||||

| os.makedirs(out_dir, exist_ok=True) | |||||

| metadata = ljspeech.build_from_path(in_dir, out_dir) | |||||

| write_metadata(metadata, out_dir) | |||||

| shutil.move(os.path.join(hp.mel_ground_truth, "train.txt"), | |||||

| os.path.join("data", "train.txt")) | |||||

| def write_metadata(metadata, out_dir): | |||||

| with open(os.path.join(out_dir, 'train.txt'), 'w', encoding='utf-8') as f: | |||||

| for m in metadata: | |||||

| f.write(m + '\n') | |||||

| def main(): | |||||

| path = os.path.join("data", "LJSpeech-1.1") | |||||

| preprocess_ljspeech(path) | |||||

| text_path = os.path.join("data", "train.txt") | |||||

| texts = process_text(text_path) | |||||

| if not os.path.exists(hp.alignment_path): | |||||

| os.mkdir(hp.alignment_path) | |||||

| else: | |||||

| return | |||||

| tacotron2 = get_Tacotron2() | |||||

| num = 0 | |||||

| for ind, text in enumerate(texts[num:]): | |||||

| print(ind) | |||||

| character = text[0:len(text)-1] | |||||

| mel_gt_name = os.path.join( | |||||

| hp.mel_ground_truth, "ljspeech-mel-%05d.npy" % (ind+num+1)) | |||||

| mel_gt_target = np.load(mel_gt_name) | |||||

| _, _, D = load_data(character, mel_gt_target, tacotron2) | |||||

| np.save(os.path.join(hp.alignment_path, str( | |||||

| ind+num) + ".npy"), D, allow_pickle=False) | |||||

| if __name__ == "__main__": | |||||

| main() | |||||

BIN

FastSpeech/results/0.wav

View File

BIN

FastSpeech/results/1.wav

View File

BIN

FastSpeech/results/2.wav

View File

+ 74

- 0

FastSpeech/synthesis.py

View File

| @ -0,0 +1,74 @@ | |||||

| import torch | |||||

| import torch.nn as nn | |||||

| import matplotlib | |||||

| import matplotlib.pyplot as plt | |||||

| import numpy as np | |||||

| import time | |||||

| import os | |||||

| from fastspeech import FastSpeech | |||||

| from text import text_to_sequence | |||||

| import hparams as hp | |||||

| import utils | |||||

| import audio as Audio | |||||

| import glow | |||||

| import waveglow | |||||

| device = torch.device('cuda' if torch.cuda.is_available() else 'cpu') | |||||

| def get_FastSpeech(num): | |||||

| checkpoint_path = "checkpoint_" + str(num) + ".pth.tar" | |||||

| model = nn.DataParallel(FastSpeech()).to(device) | |||||

| model.load_state_dict(torch.load(os.path.join( | |||||

| hp.checkpoint_path, checkpoint_path))['model']) | |||||

| model.eval() | |||||

| return model | |||||

| def synthesis(model, text, alpha=1.0): | |||||

| text = np.array(text_to_sequence(text, hp.text_cleaners)) | |||||

| text = np.stack([text]) | |||||

| src_pos = np.array([i+1 for i in range(text.shape[1])]) | |||||

| src_pos = np.stack([src_pos]) | |||||

| with torch.no_grad(): | |||||

| sequence = torch.autograd.Variable( | |||||

| torch.from_numpy(text)).cuda().long() | |||||

| src_pos = torch.autograd.Variable( | |||||

| torch.from_numpy(src_pos)).cuda().long() | |||||

| mel, mel_postnet = model.module.forward(sequence, src_pos, alpha=alpha) | |||||

| return mel[0].cpu().transpose(0, 1), \ | |||||

| mel_postnet[0].cpu().transpose(0, 1), \ | |||||

| mel.transpose(1, 2), \ | |||||

| mel_postnet.transpose(1, 2) | |||||

| if __name__ == "__main__": | |||||

| # Test | |||||

| num = 112000 | |||||

| alpha = 1.0 | |||||

| model = get_FastSpeech(num) | |||||

| words = "Let’s go out to the airport. The plane landed ten minutes ago." | |||||

| mel, mel_postnet, mel_torch, mel_postnet_torch = synthesis( | |||||

| model, words, alpha=alpha) | |||||

| if not os.path.exists("results"): | |||||

| os.mkdir("results") | |||||

| Audio.tools.inv_mel_spec(mel_postnet, os.path.join( | |||||

| "results", words + "_" + str(num) + "_griffin_lim.wav")) | |||||

| wave_glow = utils.get_WaveGlow() | |||||

| waveglow.inference.inference(mel_postnet_torch, wave_glow, os.path.join( | |||||

| "results", words + "_" + str(num) + "_waveglow.wav")) | |||||

| tacotron2 = utils.get_Tacotron2() | |||||

| mel_tac2, _, _ = utils.load_data_from_tacotron2(words, tacotron2) | |||||

| waveglow.inference.inference(torch.stack([torch.from_numpy( | |||||

| mel_tac2).cuda()]), wave_glow, os.path.join("results", "tacotron2.wav")) | |||||

| utils.plot_data([mel.numpy(), mel_postnet.numpy(), mel_tac2]) | |||||

+ 3

- 0

FastSpeech/tacotron2/__init__.py

View File

| @ -0,0 +1,3 @@ | |||||

| import tacotron2.hparams | |||||

| import tacotron2.model | |||||

| import tacotron2.layers | |||||

+ 92

- 0

FastSpeech/tacotron2/hparams.py

View File

| @ -0,0 +1,92 @@ | |||||

| from text import symbols | |||||

| class Hparams: | |||||

| """ hyper parameters """ | |||||

| def __init__(self): | |||||

| ################################ | |||||

| # Experiment Parameters # | |||||

| ################################ | |||||

| self.epochs = 500 | |||||

| self.iters_per_checkpoint = 1000 | |||||

| self.seed = 1234 | |||||

| self.dynamic_loss_scaling = True | |||||

| self.fp16_run = False | |||||

| self.distributed_run = False | |||||

| self.dist_backend = "nccl" | |||||

| self.dist_url = "tcp://localhost:54321" | |||||

| self.cudnn_enabled = True | |||||

| self.cudnn_benchmark = False | |||||

| self.ignore_layers = ['embedding.weight'] | |||||

| ################################ | |||||

| # Data Parameters # | |||||

| ################################ | |||||

| self.load_mel_from_disk = False | |||||

| self.training_files = 'filelists/ljs_audio_text_train_filelist.txt' | |||||

| self.validation_files = 'filelists/ljs_audio_text_val_filelist.txt' | |||||

| self.text_cleaners = ['english_cleaners'] | |||||

| ################################ | |||||

| # Audio Parameters # | |||||

| ################################ | |||||

| self.max_wav_value = 32768.0 | |||||

| self.sampling_rate = 22050 | |||||

| self.filter_length = 1024 | |||||

| self.hop_length = 256 | |||||

| self.win_length = 1024 | |||||

| self.n_mel_channels = 80 | |||||

| self.mel_fmin = 0.0 | |||||

| self.mel_fmax = 8000.0 | |||||

| ################################ | |||||

| # Model Parameters # | |||||

| ################################ | |||||

| self.n_symbols = len(symbols) | |||||

| self.symbols_embedding_dim = 512 | |||||

| # Encoder parameters | |||||

| self.encoder_kernel_size = 5 | |||||

| self.encoder_n_convolutions = 3 | |||||

| self.encoder_embedding_dim = 512 | |||||

| # Decoder parameters | |||||

| self.n_frames_per_step = 1 # currently only 1 is supported | |||||

| self.decoder_rnn_dim = 1024 | |||||

| self.prenet_dim = 256 | |||||

| self.max_decoder_steps = 1000 | |||||

| self.gate_threshold = 0.5 | |||||

| self.p_attention_dropout = 0.1 | |||||

| self.p_decoder_dropout = 0.1 | |||||

| # Attention parameters | |||||

| self.attention_rnn_dim = 1024 | |||||

| self.attention_dim = 128 | |||||

| # Location Layer parameters | |||||

| self.attention_location_n_filters = 32 | |||||

| self.attention_location_kernel_size = 31 | |||||

| # Mel-post processing network parameters | |||||

| self.postnet_embedding_dim = 512 | |||||

| self.postnet_kernel_size = 5 | |||||

| self.postnet_n_convolutions = 5 | |||||

| ################################ | |||||

| # Optimization Hyperparameters # | |||||

| ################################ | |||||

| self.use_saved_learning_rate = False | |||||

| self.learning_rate = 1e-3 | |||||

| self.weight_decay = 1e-6 | |||||

| self.grad_clip_thresh = 1.0 | |||||

| self.batch_size = 64 | |||||

| self.mask_padding = True # set model's padded outputs to padded values | |||||

| def return_self(self): | |||||

| return self | |||||

| def create_hparams(): | |||||

| hparams = Hparams() | |||||

| return hparams.return_self() | |||||

+ 36

- 0

FastSpeech/tacotron2/layers.py

View File

| @ -0,0 +1,36 @@ | |||||

| import torch | |||||

| from librosa.filters import mel as librosa_mel_fn | |||||

| class LinearNorm(torch.nn.Module): | |||||

| def __init__(self, in_dim, out_dim, bias=True, w_init_gain='linear'): | |||||

| super(LinearNorm, self).__init__() | |||||

| self.linear_layer = torch.nn.Linear(in_dim, out_dim, bias=bias) | |||||

| torch.nn.init.xavier_uniform_( | |||||

| self.linear_layer.weight, | |||||

| gain=torch.nn.init.calculate_gain(w_init_gain)) | |||||

| def forward(self, x): | |||||

| return self.linear_layer(x) | |||||

| class ConvNorm(torch.nn.Module): | |||||

| def __init__(self, in_channels, out_channels, kernel_size=1, stride=1, | |||||

| padding=None, dilation=1, bias=True, w_init_gain='linear'): | |||||

| super(ConvNorm, self).__init__() | |||||

| if padding is None: | |||||

| assert(kernel_size % 2 == 1) | |||||

| padding = int(dilation * (kernel_size - 1) / 2) | |||||

| self.conv = torch.nn.Conv1d(in_channels, out_channels, | |||||

| kernel_size=kernel_size, stride=stride, | |||||

| padding=padding, dilation=dilation, | |||||

| bias=bias) | |||||

| torch.nn.init.xavier_uniform_( | |||||

| self.conv.weight, gain=torch.nn.init.calculate_gain(w_init_gain)) | |||||

| def forward(self, signal): | |||||

| conv_signal = self.conv(signal) | |||||

| return conv_signal | |||||

+ 533

- 0

FastSpeech/tacotron2/model.py

View File

| @ -0,0 +1,533 @@ | |||||

| from math import sqrt | |||||

| import torch | |||||

| from torch.autograd import Variable | |||||

| from torch import nn | |||||

| from torch.nn import functional as F | |||||

| from tacotron2.layers import ConvNorm, LinearNorm | |||||

| from tacotron2.utils import to_gpu, get_mask_from_lengths | |||||

| class LocationLayer(nn.Module): | |||||

| def __init__(self, attention_n_filters, attention_kernel_size, | |||||

| attention_dim): | |||||

| super(LocationLayer, self).__init__() | |||||

| padding = int((attention_kernel_size - 1) / 2) | |||||

| self.location_conv = ConvNorm(2, attention_n_filters, | |||||

| kernel_size=attention_kernel_size, | |||||